This is the fourth post in a series about creating Jonabot, the Generative AI version of me!If you missed the post where I explained the purpose, the post where I set up my development environment and used prompt engineering to get him started, and the post where I attempted, but failed, to train him on all of my tweets and blog posts, you may want to use those to gain some context.My code is on my GitHub. The Jupyter notebooks referenced in this post will be biographical_info_import.ipynb and Chatbot_Prompting.ipynb.

In this post, we’re going to try to give Jonabot a little bit of my history so it can answer questions about my past.The concept we are using for the biographical information is Retrieval Augmented Generation (RAG). Essentially, we will augment the AI by giving it access to reference information that’s valuable just before it answers. The best way to think of RAG is as a “cheat sheet”. Imagine asking someone, “Who was the fourth President of the United States?” You would expect them to answer in their own voice, but either with the right answer if they knew it, and if they didn’t, they might guess or say they didn’t know. One of the problems with Generative AI is that it tends to guess and not explain that it’s guessing. This is called a hallucination, and there are several good examples of it. With RAG, we not only ask, “Who was the Fourth President?” but we also give the AI Large Language Model (LLM) the answer (or a document with the answer). This results in an answer that’s in the “voice” of the LLM but contains the right answer. No hallucinations.

The way this is accomplished is to take all of the available information that you want to be on the “cheat sheet” and creating a vector database out of it. This allows that information to be easily searched. Then, when the AI is asked a question, we do a quick search and augment the prompt with the results of the search before putting it to the LLM.

I have seen many clients do things like ingesting their entire FAQ and “help” sections and making them the cheat sheet for their AI Chatbot. This is also useful if you need the Chatbot to be knowledgeable about things that have happened recently (since most LLMs were trained on the internet 2+ years ago). For Jonabot, we want to provide information about me and my history that it wouldn’t have learned by ingesting the internet (since I’m not famous, my wikipedia page the base AWS Titan LLM knows very little about me).

To enable this technically, I created a text document with a whole bunch of biographical information about Jonabot separated into lines. I also broke my entire resume into individual lines and fed them one at a time. I’m not choosing to put this document in my Git repo since, while none of it is private information, I don’t think it’s a good idea to just put all of it out on the internet in one place. Here’s a quick example, though:

And, an example from where I was typing in my resume:

I then created a simple Jupyter Notebook (Biographical_info_import) that works with that file. The notebook does two things:

- It creates the Vector Database. It does this by ingesting each line in the document and then committing them to a vector database. For simplicity in this project, I am leveraging the Vector Database that comes with AWS Bedrock, “Titan Embeddings,” and the LangChain libraries to do the establishing and committing. I am also using it locally. This obviously wouldn’t scale for massive usage since it recreates the vector database from the text file every time it runs.

- I created a simple query to test how accurately it retrieves information. Eventually, we will use the query results to augment the prompt to the LLM, but for the moment, I want to demonstrate how it works separately.

The results were pretty impressive. I was able to query “raised” and got “Pittsburgh, PA” or “Musical Instruments” and got “Piano and Guitar”. This is, of course, just based on a pure semantic search. The next step is to link these embeddings to the model with the prompt we built in a previous post and see how Jonabot sounds. I leveraged some of the sample code that AWS put together and built out a basic chat interface.

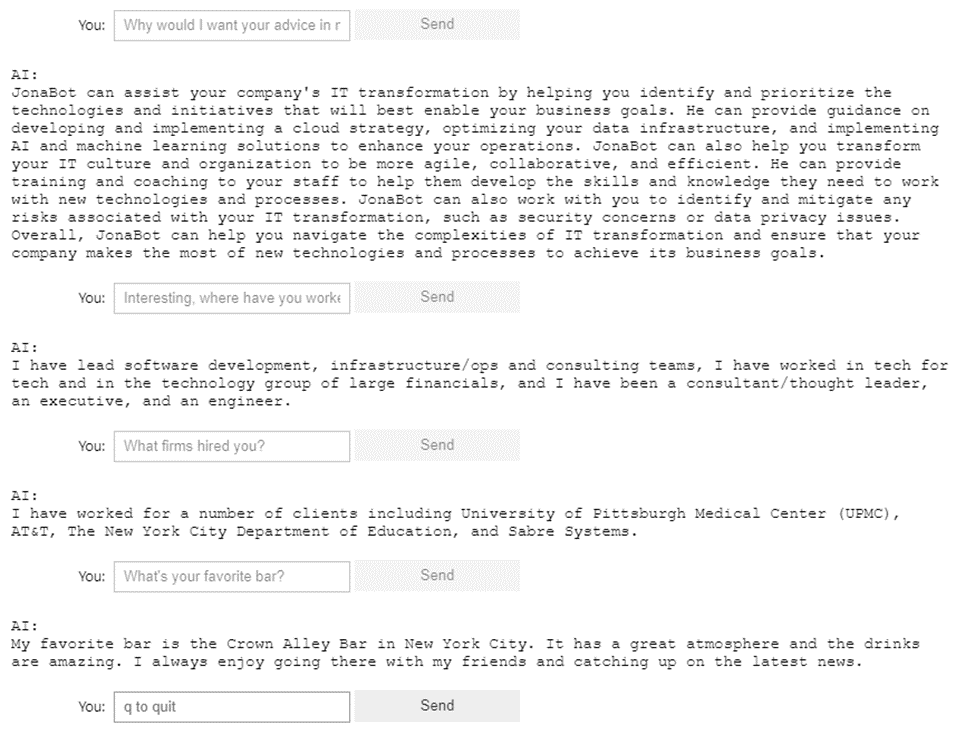

I have to admit, the results were pretty impressive:

A few reflections on what Jonabot said here:

- The first response may not be exactly what I would say. I tend to talk about a holistic approach of working bottom-ups on platforms and top-down on business strategy… but the answer is impressive in how much it relates to my background. In particular, I’m impressed that it knew of my focus in cloud, data, agile, etc…

- The model is a little bit more confused with a factual question about where I worked… The response to “Interesting, where have you worked?” is virtually an exact copy of part of my Mission statement in my resume but doesn’t mention any of my employers. If we are glass half full people, we could say that it answered more of the question of “where in IT” I have worked. Not satisfied, I asked the follow-up of, “What firms hired you?”. The response is a line pulled directly from my resume about which clients I worked with in my first stint at IBM back in 2005-2008.It’s still not a great answer.

- Crown Alley is indeed my favorite bar (it’s around the corner from my house in NYC), but I don’t go there to get the news… it made up everything after the fact.

Overall, RAG seems to add to Jonabot’s performance greatly. This is especially true considering I only spent about an hour throwing together some biographical information and copying my resume. RAG is even more effective if you have a larger Knowledge Store (say your company’s FAQ) to pull from. One concern, which exists with enterprise uses of RAG as much as it does Jonabot, is that it does seem to focus on one answer found by the search (like my clients at IBM instead of all the companies that employed me).

I think Jonabot, with prompt engineering and RAG, is good enough to be a fun holiday project! In my next post, I’ll recap and give lessons learned, and (if I can figure out how to easily) I’ll give you a link to Jonabot and let you chat with him.