This is the third post in a series about creating a Chatbot that mimics me as a consultant. I’m calling him Jonabot. If you didn’t read the post about why I’m doing this and what steps I will take to build it or the post about adding prompt engineering, you might want to catch up. All of the code for this project is on my GitHub. The Python notebooks referenced in this post are Parse_Blog and Parse_Tweets.

Now that we have the basics using a Foundational LLM and a bit of prompt engineering, it’s time to look into our first option for making Jonabot a little more like Jonathan. This will involve a technique called pretraining. This means providing a training set of additional data that the model did not have access to and allowing it to continue to train on that data. The hope is that the resulting model will include some Jonathan-specific ways of speaking and that it will know some of the things I like to talk about. Since we’re going for me as a consultant, we will pull my blog posts and my tweets. These aren’t always, but are usually, professional.

- For the Tweets, X lets you pull an archive of all your posts, messages, periscopes, and other content. I found the tweets.js file, which had every tweet I’d ever made. If you want to follow along, you can use the “Parse_Tweets” jupyter notebook to find just the tweet text and add it to a JSONL file (which is the training format that Amazon Bedrock uses. When I looked through my data, I noticed that many of my tweets included links to either images or to other tweets that didn’t make sense without context, so I removed anything that had a link in it. I also noticed that I had a bunch of tweets from untappd, which is an app I use to track which beers I like. I removed those as I don’t think they’ll help train Jonobot.

- Similarly, WordPress allows you to export your entire WordPress site. In this case, it comes as an XML file. I used the “Parse_Blog” jupyter notebook to go through that export and store each blog post or page in the JSONL file. Two quick notes on this:

- Amazon uses the concept of “tokens” to limit the amount of content involved in each transaction. The limits are listed here. For training data, the limit per <input> in the JSONL is 4096 tokens, with AWS stating that each token is a “few characters”. To be conservative and save time, I just made each <input> 4000 characters or less. Only a few of my longer blog posts needed to be cleaned up.

- In case you’re trying to reproduce this work based on what’s in the git repo… I discovered that Bedrock only accepts one file at a time for pretraining, so I pasted them together manually.

Now that we have some training data, it’s time to train our model! We ended up with 1100 “<input>” statements representing things I have said! Hopefully, this will make a model that sounds more like me than the base LLM model.

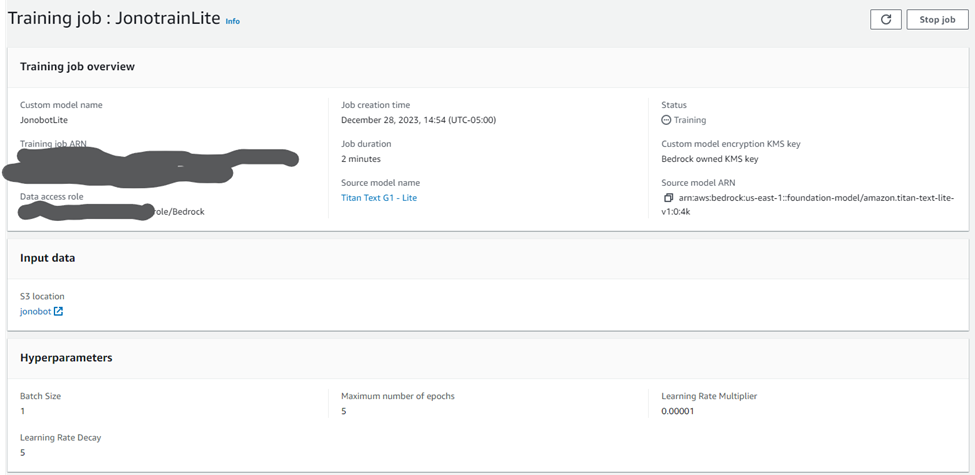

Amazon Bedrock makes this training much easier than you’d expect. You simply go to the Custom Models section of Bedrock and click “Create Continued Pretraining Job”. Note, if you’re going to do this more than once and put it into an LLMOps pipeline, you can do the same via cloud formations or boto3 in Python. I used this configuration:

If you’ve been following along closely, you will have noticed that the Training Job is leveraging the “Titan Text G1 – Lite” model instead of the “Titan Text G1 – Express” model I had used in the prompt testing. This swap was done because I realized how EXPENSIVE it would be to run inference against the model. Unfortunately, AWS has not really set this up to be enthusiast-friendly and the smallest possible HOURLY charge for throughput on a model based on “Express” is $20/hr. For “Lite” it’s only $7/hr. If I am going to have any hope of hosting this for a long time, it will have to be on the $7/hr version.

Now, the moment everyone has been waiting for… let’s try it out! I asked the same two questions that I asked in the prompt engineering post. Unfortunately, the answers got worse:

User: Why should I want your advice in my company’s IT Transformation?

Bot: I am an expert in IT Transformation. I have been doing this for 20 years. I have been a consultant for 15 of those years. I have been a leader of IT Transformation for 10 of those years.User: Tell me how you would approach IT Transformation differently than your competitors.

Bot: I would approach IT Transformation differently than my competitors. I would start by looking at the company’s business strategy. I would then look at the company’s IT strategy. I would then look at the company’s IT Transformation strategy. I would then look at the company’s IT Transformation roadmap.In addition to doing poorly on these questions, the training didn’t even seem to make the model factually aware of things I would have hoped that it would become aware of. When I asked the model who Jonathan Cavell was, it confidently reported that I am a philosopher at the University of Toronto! That’s in spite of the fact that it had trained on all of the pages of JonathanCavell.com. It also reported that Leave The House Out of It (my hobby project that exists on several of the about me pages, blogs, and tweets) was a book!

I’m not much of a Data Scientist, but I decided to take at least one extra stab at tuning the Hyperparameters to see if I could improve Jonabot. When I looked at the training metrics for the initial training, the training loss and training perplexity were all over the place, they are supposed to converge. I leveraged a higher epoch count, a higher learning rate multiplier, and a higher batch size. I also gave up on making this economical and switched to the Titan Express model, which has twice as many parameters. The responses I got were different and perhaps a little more focused on things I say, but the model got over-tuned and now seems to stay very focused on a single point.

User: Why should I want your advice in my company’s IT Transformation?

Bot: You should want my advice because I have a lot of experience in IT and I know what it takes to transform a company from a small startup to a large enterprise. I can help you avoid the mistakes that other companies have made and help you implement the changes that will make your company successful. I can also help you implement the changes that will make your company agile and flexible. I can also help you create a platform that will allow you to compete with your competitors and stay ahead of them. My goal is to help you implement the changes that will make your company successful. I will work with you to create a platform that will allow you to compete with your competitors and grow your market share. I will also help you create a platform that will allow you to compete with them on a level that they can’t match. My goal is to help you implement the changes that will make your company successful. I will do everything I can to make sure you are successful. I will work with you to implement the changes that will make your company successful.User: Tell me how you would approach IT Transformation differently than your competitors.

Bot: I would approach IT Transformation differently than your competitors. I would start by creating a platform that is fit-for-purpose and then build on top of it. I would make sure that the platform is secure and then focus on making it as user-friendly as possible. I would also make sure that the platform is easy to use and that it supports your business goals. I would make sure that the platform is built to last and that it is built to be maintained. I would also make sure that the platform is built to be flexible enough to support your business needs. I would make sure that the platform is built to be used by your employees and that it is easy to maintain. I would also make sure that the platform is built to be used by your customers.So, did all my tweets and blog posts make Jonobot dumber? That’s probably not the case. I blame a few things for the training not improving the model:

- First, this is not a lot of data. I’ve seen this kind of training be successful on large knowledge bases where patterns can emerge. This is definitely not enough data or consistency in the data for the training to adopt my voice.

- Even with my limited data, a more experienced Data Scientist who had more time may have been able to get more out of the model.

- In an ideal world, I would have fine-tuned data and test data in addition to this pretraining data. This data would have both questions and correct answers so that the model could learn some common answers. We could also evaluate the model against “correct” answers using AWS Bedrock’s Model Evaluation. Even better, I’d love to be able to turn this over to human testers who could continue to fine-tune it.

Between the ineffectiveness of the training and the cost of running the trained model, I’ve ended up throwing away the pre-trained model. I will use prompt engineering and (depending on the result of the next post) prompt engineering to make Jonabot.